
Abstract

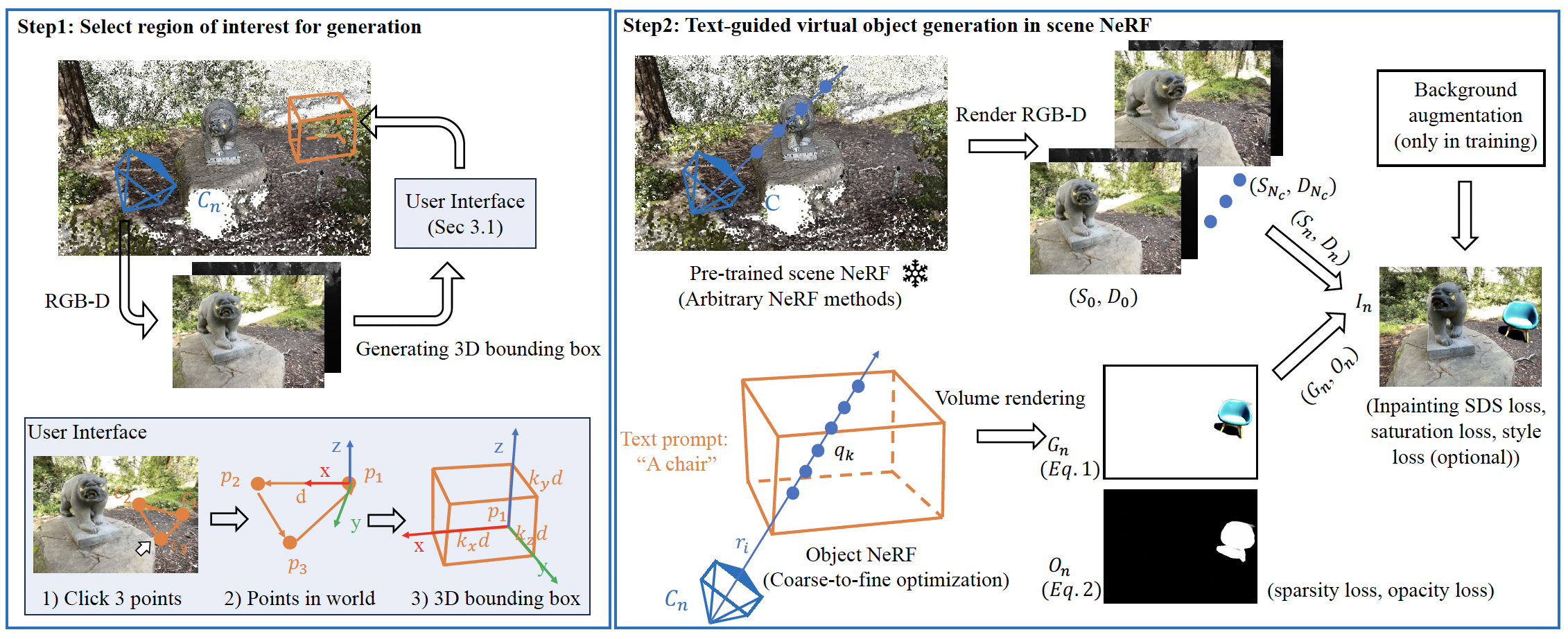

Despite advances in 3D generation, directly creating 3D objects within an existing 3D scene represented as a NeRF remains underexplored. This task requires not only high-quality 3D object generation but also seamless composition of the generated content into the existing NeRF. To this end, we propose GO-NeRF, a method that exploits scene context to generate high-quality 3D objects that blend harmoniously into an existing NeRF. Our method employs a compositional rendering formulation in which learned 3D-aware opacity maps composite the generated objects into the scene without unintentionally modifying it. We also develop tailored optimization objectives and training strategies that strengthen the model's use of scene context and mitigate artifacts, such as floaters, that arise when generating 3D objects within a scene. Extensive experiments on both forward-facing and 360° scenes demonstrate the superior performance of GO-NeRF in generating objects harmoniously composited with their surroundings and in synthesizing high-quality novel-view images. Code will be made publicly available.
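The idea of compositing a generated object into a scene through an opacity map can be illustrated with a minimal NumPy sketch. It assumes a generic formulation that is not necessarily GO-NeRF's own: the object field is volume-rendered along a ray to give a premultiplied color and an accumulated opacity, and that opacity alpha-composites the object over the scene rendering, so the scene's radiance field itself is never modified. It also includes a binary-entropy penalty on the opacity, a regularizer common in the NeRF literature for discouraging semi-transparent floaters. The function names (`volume_render`, `composite_over_scene`, `opacity_entropy`) are hypothetical.

```python
import numpy as np


def volume_render(sigmas, colors, deltas):
    """Standard NeRF quadrature along a single ray.

    sigmas: (N,) densities, colors: (N, 3) RGB, deltas: (N,) sample spacings.
    Returns premultiplied RGB (3,) and accumulated opacity in [0, 1].
    """
    alphas = 1.0 - np.exp(-sigmas * deltas)  # per-sample opacity
    # Transmittance T_i = prod_{j<i} (1 - alpha_j), with T_0 = 1.
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alphas)[:-1]])
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, float(weights.sum())


def composite_over_scene(obj_rgb, obj_opacity, scene_rgb):
    """'Over' operator: the object's opacity decides how much scene shows through.

    Hypothetical simplification: assumes nothing in the scene occludes the object.
    """
    return obj_rgb + (1.0 - obj_opacity) * scene_rgb


def opacity_entropy(opacity, eps=1e-6):
    """Mean binary entropy of an opacity map; minimizing it pushes values to 0 or 1."""
    a = np.clip(np.asarray(opacity, dtype=float), eps, 1.0 - eps)
    return float(np.mean(-(a * np.log(a) + (1.0 - a) * np.log(1.0 - a))))


if __name__ == "__main__":
    deltas = np.ones(3)
    colors = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 0.0]])
    scene_rgb = np.array([0.0, 0.0, 1.0])  # blue pixel from the scene NeRF

    # A ray that hits a dense red object sample: the object fully covers the scene.
    rgb, acc = volume_render(np.array([0.0, 1e3, 0.0]), colors, deltas)
    print(composite_over_scene(rgb, acc, scene_rgb))  # red

    # An empty ray through the object field: the scene pixel is left untouched.
    rgb, acc = volume_render(np.zeros(3), colors, deltas)
    print(composite_over_scene(rgb, acc, scene_rgb))  # blue
    print(opacity_entropy([0.5, 0.5]), opacity_entropy([0.0, 1.0]))
```

Because the object's contribution is gated entirely by its own opacity, rays that miss the object return the scene rendering unchanged; a real system would additionally need depth-aware handling so that scene geometry in front of the object can occlude it.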

Pipeline

Left: a user-friendly interface in which the region of interest (ROI) for generation is specified by clicking three points on the image plane. Right: a compositional rendering pipeline that seamlessly composites the generated 3D objects into the scene's neural radiance field.
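One possible way to turn three clicks into a 3D ROI is sketched below in NumPy. This is a hypothetical construction, not necessarily the one GO-NeRF uses: each clicked pixel is lifted to a world-space point using a rendered depth, pinhole intrinsics `K`, and a camera-to-world matrix `c2w` (OpenCV convention assumed: x right, y down, z forward), and the ROI is the padded axis-aligned bounding box of the three points. The `inside_roi` mask shows how such a box could restrict where the object field is allowed to place density. All function names and the `margin` parameter are illustrative.

```python
import numpy as np


def unproject(pixels, depths, K, c2w):
    """Lift pixels (M, 2) with z-depths (M,) to world-space points (M, 3).

    Assumes the OpenCV camera convention (x right, y down, z forward).
    """
    homo = np.hstack([pixels, np.ones((pixels.shape[0], 1))])  # (M, 3)
    dirs = homo @ np.linalg.inv(K).T  # camera-space rays with z = 1
    pts_cam = dirs * depths[:, None]  # scale each ray so z equals depth
    R, t = c2w[:3, :3], c2w[:3, 3]
    return pts_cam @ R.T + t  # camera -> world


def roi_from_clicks(pixels, depths, K, c2w, margin=0.1):
    """Hypothetical ROI: padded axis-aligned box around the unprojected clicks."""
    pts = unproject(np.asarray(pixels, float), np.asarray(depths, float), K, c2w)
    return pts.min(axis=0) - margin, pts.max(axis=0) + margin


def inside_roi(points, lo, hi):
    """Boolean mask of sample points (N, 3) that fall inside the ROI box."""
    return np.all((points >= lo) & (points <= hi), axis=-1)


if __name__ == "__main__":
    K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 50.0], [0.0, 0.0, 1.0]])
    c2w = np.eye(4)
    clicks = [[50, 50], [150, 50], [50, 150]]  # three clicked pixels
    depths = [2.0, 2.0, 4.0]  # rendered depth at each click
    lo, hi = roi_from_clicks(clicks, depths, K, c2w)
    print(lo, hi)  # approximately [-0.1 -0.1 1.9] [2.1 4.1 4.1]
    print(inside_roi(np.array([[1.0, 1.0, 3.0], [5.0, 0.0, 3.0]]), lo, hi))
```

An axis-aligned box is the simplest choice; an oriented box aligned with the clicked points would fit tilted surfaces more tightly at the cost of a slightly more involved inside test.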

Results on the same scene with different text prompts

"basket of fruits", "a pumpkin"

original scene, "a plush toy Pikachu", "a traffic cone"

original scene, "a stone", "a stone chair"

original scene, "a wooden boat", "a boat", "a puppy standing on the ground", "a backpack", "a red hydrant"

Results on the same text prompt with different scene styles

"a cat" (the same prompt shown in three scene styles)

More Results